Seishi Takamura, FIEEE, NTT Media Intelligence Laboratories

Image/Video compression overview

Almost every day, at almost every moment, we watch video, hold teleconferences, take photos and videos (or appear in them), post and send them, and look at pictures on smartphones, TVs, PCs, and other devices. An ever-growing amount of data is being transferred over the Internet. IP video traffic reportedly accounted for 75% of all worldwide Internet protocol (IP) traffic in 2017 and is forecast to reach 82% by 2022 [1]. This traffic consists mostly of already compressed video, not raw data captured by sensors. Image/video coding, which here means the same thing as compression, has been researched for nearly a century. Its origins date back to the creation of the first videophone system at AT&T Bell Labs, called the Ikonophone, in 1927 [2]. An analog television standard, NTSC, was standardized in 1941, and TV broadcasting subsequently spread around the world.

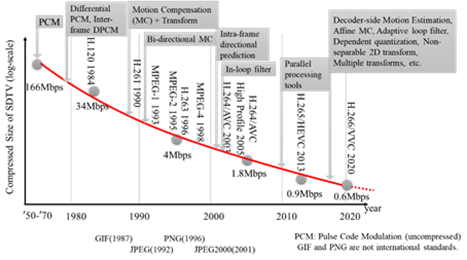

Later, digital video representation systems emerged in the 1970s, and their (at the time) huge data volumes naturally required compression. CCITT (now ITU-T) created the first video coding standard, H.120, in 1984, which was succeeded by H.261 in 1990. In 1992, the first and still widely used image coding standard, ISO/IEC 10918 (JPEG), was created. ISO/IEC JTC 1/SC 29/WG 11 (MPEG) created MPEG-1 (used by Video CD, etc.) [3], MPEG-2 (with ITU-T; widely used by DVDs, HDD recorders, digital broadcasting, etc.), MPEG-4, and MPEG-4 Advanced Video Coding (AVC; with ITU-T; currently the most widely used coding scheme, used by Blu-ray Discs, digital broadcasting, etc.) in 1993, 1995, 1998, and 2003, respectively. In 2013, MPEG-H High Efficiency Video Coding (HEVC) was created, followed by its 3D video extensions in 2015. HEVC has been used for ultra-high-definition (UHD) TV, among other applications. Most recently, the latest video coding standard, MPEG-I Versatile Video Coding (VVC) [3], was created in July 2020.

Roughly speaking, compression performance doubled with each major video coding standard up to HEVC and has improved steadily over the years (Fig. 1). VVC (with reference software VTM10.0) achieves 25% lower bitrate for still images and 30-36% lower bitrate for video than the preceding standard, HEVC (with reference software HM16.2). Besides its high coding efficiency, VVC supports various functionalities such as screen content coding (for non-camera-captured content such as PC screens or games), adaptive resolution change, spatial scalability, tile-based streaming, and 360-degree video coding. Although VVC encoding runs 6 to 24 times slower than HEVC encoding, decoding is not much slower (less than 2 times). Recently, a very fast and efficient software implementation of the VVC codec has been developed, which encodes 100 times faster than VTM while maintaining even better subjective quality [4].
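To make the generational gains concrete, the following minimal sketch chains the savings quoted above. It rests on two assumptions that are not stated in the article: that "performance doubled" can be read as a 50% bitrate saving from AVC to HEVC at equal quality, and that savings multiply across generations. The function name `cumulative_bitrate` is hypothetical and used only for illustration.

```python
def cumulative_bitrate(savings):
    """Return the bitrate remaining after each successive fractional saving.

    The starting codec is normalized to 1.0; each entry in `savings` is the
    fraction of bitrate removed by the next codec generation.
    """
    remaining = 1.0
    trajectory = []
    for saving in savings:
        remaining *= 1.0 - saving
        trajectory.append(remaining)
    return trajectory


# Assumption: "performance doubled" is read as a 50% bitrate saving (AVC -> HEVC).
# The 30% and 36% figures are the VVC-over-HEVC video savings quoted in the text.
AVC_TO_HEVC = 0.50
vvc_low = cumulative_bitrate([AVC_TO_HEVC, 0.30])[-1]
vvc_high = cumulative_bitrate([AVC_TO_HEVC, 0.36])[-1]

print(f"VVC bitrate relative to AVC: {vvc_high:.0%} to {vvc_low:.0%}")
```

Under these assumptions, VVC would need roughly one third of the AVC bitrate for comparable video quality. Actual savings depend on content, resolution, and encoder configuration.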

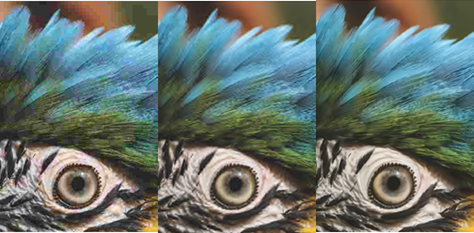

A visual comparison of the image compression performance of the JPEG, JPEG2000, and VVC coding standards is shown in Fig. 2. All images are compressed to 1/100 of the original size. On closer inspection, the differences are obvious. With JPEG, both the background and the feather areas show blocking artifacts, and false-color distortion is visible around the bird's eye. JPEG2000 still loses feather detail, while VVC shows no obvious impairment.

Organizing Increasing IoT data

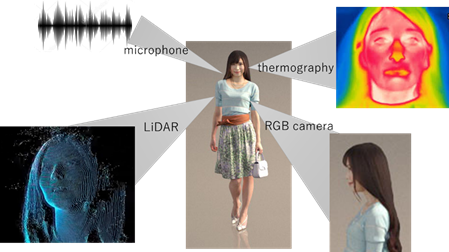

Multi-modal IoT sensors (such as cameras, microphones, thermometers, LiDAR, and hygrometers) are expected to be deployed around the world to collect omni-ambient data, which will enable various applications, for example weather forecasting, disaster prevention, smart cities, surveillance, security, intelligent transport systems, and infrastructure maintenance. It has been reported that storage capacity is expected to grow 10-fold per decade, reaching an estimated 100 zettabytes (ZB) (1 ZB = 10^21 bytes) by 2030 and 1 yottabyte (YB) (1 YB = 10^24 bytes) by 2040. IoT data, however, is expected to grow 40-fold per decade, reaching an estimated 1 YB by 2030 and 40 YB by 2040 [5]. This means that most of the acquired data will have to be discarded without being recorded: by these estimates, storage could hold only about 10% of the data in 2030 and 2.5% in 2040. Visual data can be expected to account for most of this volume, so VVC will undoubtedly be a killer compression tool. However, since it is oriented toward a single modality (i.e., visual data only), it leaves room for improvement when dealing with multi-modal data. Suppose there are two signal sources X and Y. If they are correlated, compressing X and Y (or even more sources) jointly is more efficient than compressing each separately, because their joint entropy is smaller than the sum of their individual entropies. This encourages us to move away from conventional single-modal data coding and advance toward multi-modal data coding. Fig. 3 shows an example of such a multi-modal data acquisition scenario.
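The benefit of joint coding for correlated sources can be illustrated with Shannon entropy, which bounds the average number of bits per sample an ideal lossless coder needs. The sketch below is only a toy illustration, not a method from the article: the sequences `x` and `y` are hypothetical binary sensor readings chosen so that they agree in 6 of 8 samples, and `entropy` is a helper defined here that computes empirical entropy from symbol counts.

```python
import math
from collections import Counter


def entropy(symbols):
    """Empirical Shannon entropy of a sequence, in bits per symbol."""
    counts = Counter(symbols)
    total = len(symbols)
    return -sum(c / total * math.log2(c / total) for c in counts.values())


# Hypothetical correlated sources: y matches x in 6 of the 8 samples.
x = [0, 0, 0, 0, 1, 1, 1, 1]
y = [0, 0, 0, 1, 1, 1, 1, 0]

h_x = entropy(x)                    # cost of coding X alone
h_y = entropy(y)                    # cost of coding Y alone
h_joint = entropy(list(zip(x, y)))  # cost of coding the pairs (X, Y) together

print(f"H(X) + H(Y) = {h_x + h_y:.3f} bits per sample pair")
print(f"H(X, Y)     = {h_joint:.3f} bits per sample pair")
```

Here each source alone costs 1 bit per sample, so separate coding needs 2 bits per pair, while the joint entropy is about 1.81 bits. In general H(X, Y) <= H(X) + H(Y), with equality only when the sources are independent, so the gap grows with the strength of the correlation.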

Conclusion

In this article, we reviewed the long history of video coding technology and introduced its state-of-the-art performance. We then extended the compression target from conventional video to future omni-ambient data and highlighted that multi-modal coding techniques will gain importance in the coming IoT era. We expect more research effort to be devoted to this area, and organizing such enormous amounts of data will open up new types of applications.

References

- [1] Cisco Visual Networking Index: Forecast and Trends, 2017–2022 White Paper.

- [2] M. Lauri, “Mobile Videophone,” Proc. Research Seminar on Telecommunications Business, Helsinki University of Technology, pp. 37-41, Spring 2007.

- [3] ISO/IEC 23090-3, “Information technology — Coded representation of immersive media — Part 3: Versatile video coding,” July 2020.

- [4] A. Wieckowski et al., “Towards a Live Software Decoder Implementation for the Upcoming Versatile Video Coding (VVC) Codec,” Proc. IEEE ICIP 2020, Oct. 2020.

- [5] S. Takamura, “Data-coding Approaches for Organizing Omni-ambient Data,” NTT Technical Review, Vol. 17, No. 12, pp. 28-34, Dec. 2019.

Seishi Takamura received the B.E., M.E. and Ph.D. degrees from the University of Tokyo in 1991, 1993 and 1996, respectively. In 1996 he joined Nippon Telegraph and Telephone (NTT) Corporation, where he has been engaged in research on efficient video coding and ultra-high quality video coding. He has fulfilled various duties in the research and academic community in current and prior roles including Associate Editor of IEEE Transactions on Circuits and Systems for Video Technology, Executive Committee Member of IEEE Tokyo Section, Japan Council and Region 10 (Asia-Pacific). He has also served as Chair of ISO/IEC JTC 1/SC 29 Japan National Body, Japan Head of Delegation of ISO/IEC JTC 1/SC 29, and as an International Steering Committee Member of the Picture Coding Symposium. From 2005 to 2006, he was a Visiting Scientist at Stanford University, California, USA. He is currently a Senior Distinguished Engineer at NTT Media Intelligence Laboratories. Dr. Takamura is a Fellow of IEEE and IEICE, a senior member of IPSJ, and a member of MENSA, APSIPA, SID and ITE.